As modern applications continue to evolve, distributed systems have become a foundational part of the technology stack. Whether you’re building cloud-native applications, microservices architectures, or simply working with distributed databases, understanding the core concepts behind distributed systems is essential for every developer. Below are five core concepts that every developer should know to effectively design, develop, and troubleshoot distributed systems.

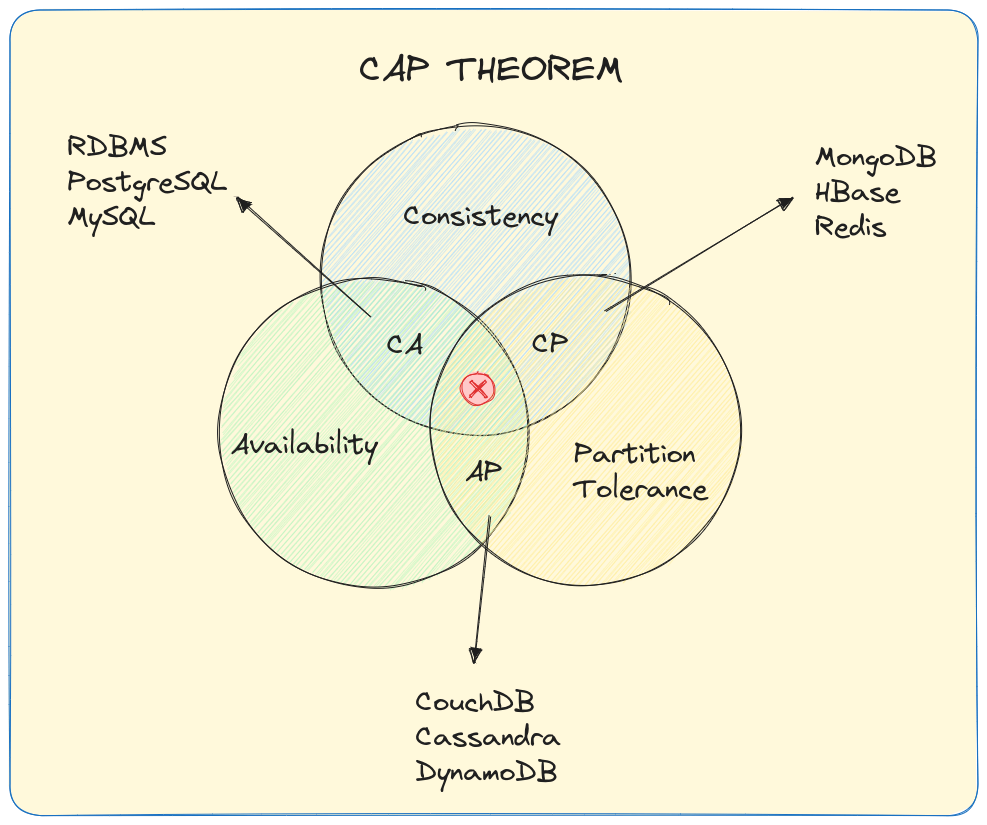

1. Consistency, Availability, and Partition Tolerance (CAP Theorem)

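The CAP Theorem is a fundamental principle that governs the behavior of distributed systems. Conjectured by computer scientist Eric Brewer in 2000 and formally proven by Seth Gilbert and Nancy Lynch in 2002, it states that a distributed system cannot guarantee all three of the following properties at once. In practice, when a network partition occurs, the system must give up either consistency or availability: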

Consistency: Every read returns the most recent write (or an error), so all nodes behave as if they shared a single up-to-date copy of the data. Formally, this is linearizability: every operation appears to take effect atomically at a single point between its invocation and its response, in an order consistent with real time.

Availability: Every request received by a non-failing node gets a non-error response, even when other nodes have failed, though that response is not guaranteed to contain the most recent write.

Partition Tolerance: The system continues to function even if there are network partitions that prevent some nodes from communicating with others.

The CAP Theorem helps developers understand the trade-offs involved in designing distributed systems. In practice, systems have to choose which properties to prioritize based on the application’s requirements:

- CP Systems (Consistent and Partition Tolerant): Traditional relational databases like PostgreSQL with synchronous replication prioritize consistency. When network partitions occur, some nodes become unavailable to maintain consistency.

- AP Systems (Available and Partition Tolerant): NoSQL databases like Apache Cassandra and Amazon DynamoDB prioritize availability in their default configurations (both can be tuned toward stronger consistency). During network partitions, all nodes remain available but may return stale data.

- CA Systems (Consistent and Available): Single-node databases can achieve both consistency and availability but cannot tolerate network partitions. Because partitions are unavoidable in real networks, CA is not a practical option for a distributed system.
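To make the CP/AP trade-off concrete, here is a toy JavaScript model of one value replicated on three nodes, where each call receives the list of replicas the client can currently reach. `ToyRegister` is hypothetical and is not how PostgreSQL, Cassandra, or DynamoDB are implemented: in CP mode it refuses reads and writes that cannot reach a majority, while in AP mode it answers from whatever replicas it can reach, even if they are stale.

```javascript
// Toy model (hypothetical, for illustration only): one value replicated on N nodes.
// Each operation receives `reachable`, the replica indexes the client can contact.
class ToyRegister {
  constructor(mode, replicaCount = 3) {
    this.mode = mode; // "CP" or "AP"
    this.replicas = Array.from({ length: replicaCount }, () => ({ version: 0, value: null }));
    this.majority = Math.floor(replicaCount / 2) + 1;
  }

  // Returns an error object when this mode cannot serve a request from `reachable`.
  refuse(reachable) {
    if (reachable.length === 0) return { ok: false, error: "no replica reachable" };
    if (this.mode === "CP" && reachable.length < this.majority) {
      return { ok: false, error: "no majority reachable" }; // CP gives up availability
    }
    return null;
  }

  write(value, reachable) {
    const refusal = this.refuse(reachable);
    if (refusal) return refusal;
    // Note: in AP mode, writes on both sides of a partition can end up with the
    // same version; real AP systems need a conflict-resolution rule for that.
    const version = Math.max(...reachable.map((i) => this.replicas[i].version)) + 1;
    for (const i of reachable) this.replicas[i] = { version, value };
    return { ok: true };
  }

  read(reachable) {
    const refusal = this.refuse(reachable);
    if (refusal) return refusal;
    // Return the newest copy among reachable replicas. In CP mode that set is a
    // majority, which always overlaps the majority that accepted the last write.
    const newest = reachable
      .map((i) => this.replicas[i])
      .reduce((a, b) => (b.version > a.version ? b : a));
    return { ok: true, value: newest.value };
  }
}

// Partition: replica 2 is cut off from replicas 0 and 1.
for (const mode of ["CP", "AP"]) {
  const reg = new ToyRegister(mode);
  reg.write("v1", [0, 1, 2]); // before the partition
  reg.write("v2", [0, 1]);    // accepted on the majority side
  console.log(mode, reg.read([2])); // client stuck on the minority side
}
```

With replica 2 cut off, the CP register returns an error to the client on the minority side, while the AP register answers with the stale `v1`. The sketch deliberately leaves out conflict resolution (for example last-write-wins), which a real AP system needs because both sides of a partition may accept writes.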

Real-world Example: MongoDB lets you configure read and write concerns to lean toward either consistency or availability based on your requirements. With a {w: "majority", j: true} write concern, MongoDB acknowledges a write only after it has been written to the on-disk journal on a majority of the replica set's voting members, prioritizing consistency and durability.
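The intuition behind majority acknowledgements is quorum overlap: with N replicas, if a write waits for W of them and a read consults R of them, every read set shares at least one replica with every write set whenever R + W > N. Dynamo-style stores such as Cassandra expose this directly as tunable consistency levels; MongoDB's read concerns work differently, so treat this as the general idea rather than a model of MongoDB. The brute-force check below (function names are illustrative) confirms the rule for small clusters:

```javascript
// Enumerate all k-element subsets of replica ids 0..n-1.
function subsets(n, k, start = 0, acc = []) {
  if (acc.length === k) return [acc];
  const out = [];
  for (let i = start; i < n; i++) out.push(...subsets(n, k, i + 1, [...acc, i]));
  return out;
}

// True if every possible read quorum of size r shares at least one replica
// with every possible write quorum of size w.
function quorumsAlwaysOverlap(n, r, w) {
  const reads = subsets(n, r);
  const writes = subsets(n, w);
  return reads.every((rs) => writes.every((ws) => rs.some((id) => ws.includes(id))));
}

for (const [n, r, w] of [[3, 2, 2], [3, 1, 3], [3, 1, 1], [5, 3, 3], [5, 2, 3]]) {
  console.log(`N=${n} R=${r} W=${w}: overlap=${quorumsAlwaysOverlap(n, r, w)}, R+W>N=${r + w > n}`);
}
```

The `[5, 2, 3]` case shows why R + W = N is not enough: a read of replicas {0, 1} can completely miss a write that landed on {2, 3, 4}.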

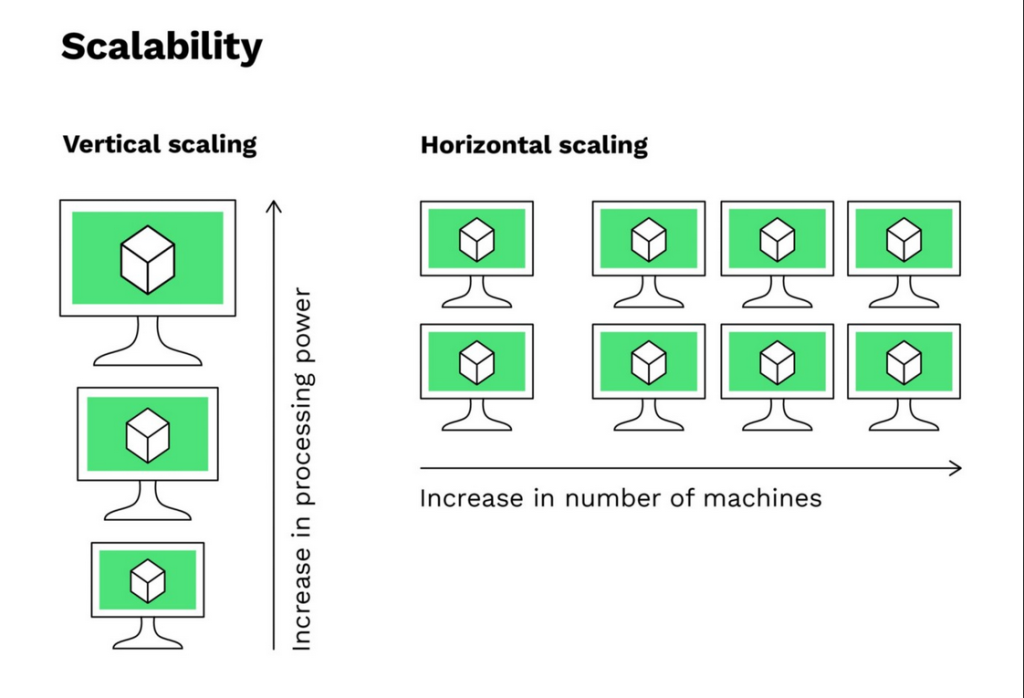

2. Scalability

Scalability refers to a system’s ability to handle an increasing amount of load or traffic. In the context of distributed systems, scalability is particularly important because as more resources (servers, nodes, etc.) are added, the system should seamlessly expand without compromising performance.

There are two main types of scalability:

- Vertical Scaling (Scaling Up): This involves adding more resources (CPU, RAM, etc.) to an existing server. However, this approach has limits: it is capped by the largest hardware available, and a single server remains a single point of failure.

```shell
# Example: after upgrading the server's RAM, allow a Redis cache to use more of it
redis-server --maxmemory 8gb   # increased from 4gb
```

- Horizontal Scaling (Scaling Out): This involves adding more machines or nodes to the system. Horizontal scaling is a fundamental feature of distributed systems because it allows them to handle large-scale, global workloads by distributing the load across many machines.

Real-world Example: Netflix uses Amazon EC2 Auto Scaling Groups to automatically adjust their service capacity based on traffic patterns. During peak viewing hours, their system automatically provisions additional servers to handle the increased load, and scales back during off-peak hours to optimize costs.
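Auto scaling policies like this commonly use target tracking: choose a metric such as average CPU utilization and a target value, then resize the group in proportion to how far the metric is from the target. The sketch below uses the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current × currentMetric / target), clamped to minimum and maximum sizes. It is an illustration with hypothetical numbers, not Netflix's configuration or the exact algorithm EC2 Auto Scaling uses.

```javascript
// Proportional target-tracking rule (as documented for the Kubernetes HPA):
// desired = ceil(current * currentMetric / targetMetric), clamped to [min, max].
function desiredInstances({ current, currentMetric, targetMetric, min = 1, max = 100 }) {
  const raw = Math.ceil((current * currentMetric) / targetMetric);
  return Math.min(max, Math.max(min, raw));
}

// Hypothetical peak: 10 servers averaging 90% CPU against a 60% target.
console.log(desiredInstances({ current: 10, currentMetric: 90, targetMetric: 60 })); // 15
// Hypothetical off-peak: 15 servers averaging 20% CPU scale back in.
console.log(desiredInstances({ current: 15, currentMetric: 20, targetMetric: 60 })); // 5
```

Production autoscalers add tolerances and cooldown or stabilization periods on top of a rule like this so that small metric fluctuations don't make the group flap between sizes.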

Implementing horizontal scaling requires careful consideration of:

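- Statelessness: Services should not keep client session state in an instance's memory, so that any instance can handle any request; session data belongs in a shared store such as a database or Redis.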

- Service Discovery: Systems like Consul or etcd help services find each other as the number of instances changes.

- Load Balancing Algorithms: Round-robin is simple but might not be optimal; alternatives like least connections or weighted response time can improve performance.
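The difference between the two simplest balancing strategies fits in a few lines. These classes are hypothetical, single-process sketches; production load balancers such as NGINX and HAProxy offer both strategies as configuration options.

```javascript
// Round-robin: hand out servers in a fixed rotation, ignoring their load.
class RoundRobinBalancer {
  constructor(servers) {
    this.servers = servers;
    this.next = 0;
  }

  pick() {
    const server = this.servers[this.next];
    this.next = (this.next + 1) % this.servers.length;
    return server;
  }
}

// Least connections: choose the server with the fewest in-flight requests.
// Callers must call release(server) when a request finishes.
class LeastConnectionsBalancer {
  constructor(servers) {
    this.active = new Map(servers.map((s) => [s, 0])); // server -> in-flight count
  }

  pick() {
    let best = null;
    for (const [server, count] of this.active) {
      // Strict "<" means ties go to the earlier server in the list.
      if (best === null || count < this.active.get(best)) best = server;
    }
    this.active.set(best, this.active.get(best) + 1);
    return best;
  }

  release(server) {
    this.active.set(server, this.active.get(server) - 1);
  }
}

const rr = new RoundRobinBalancer(["app-1", "app-2", "app-3"]);
console.log(rr.pick(), rr.pick(), rr.pick(), rr.pick()); // app-1 app-2 app-3 app-1
```

Round-robin ignores how busy each server is, so a server stuck on slow requests keeps receiving its full share of new ones; least connections adapts to uneven request durations at the cost of tracking in-flight requests.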

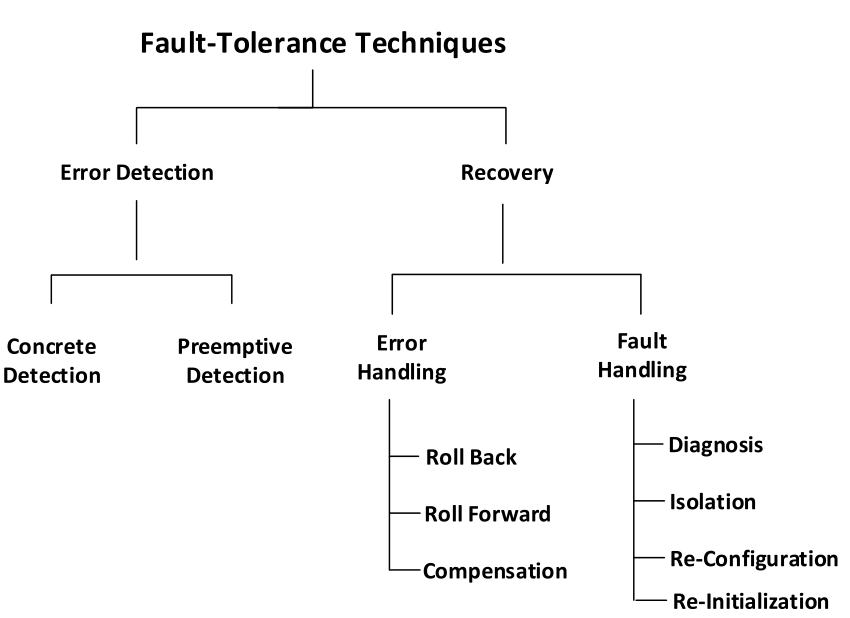

3. Fault Tolerance

In a distributed system, failures are inevitable. Servers can go down, network connections can fail, and services can become unavailable. Fault tolerance is the ability of a system to continue operating correctly even when some components fail.

Key strategies for fault tolerance include:

- Replication: Making copies of critical data across multiple servers or nodes ensures that if one instance fails, others can take over, preventing downtime.

- Failover Mechanisms: Systems should automatically detect failures and switch to a backup system or server to minimize service disruption.

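```javascript
// Example: Simple retry mechanism with exponential backoff in Node.js (18+, which provides a global fetch)
async function fetchWithRetry(url, retries = 3, backoff = 300) {
  try {
    const response = await fetch(url);
    // fetch() rejects only on network errors, so treat 5xx responses as failures too
    if (response.status >= 500) {
      throw new Error(`Server error: ${response.status}`);
    }
    return response;
  } catch (error) {
    if (retries <= 0) throw error;
    // Wait, then retry with double the delay (300 ms, 600 ms, 1200 ms, ...)
    await new Promise((resolve) => setTimeout(resolve, backoff));
    return fetchWithRetry(url, retries - 1, backoff * 2);
  }
}
```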

- Graceful Degradation: Instead of crashing when a failure occurs, a well-designed distributed system should continue to operate at a reduced capacity.
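Graceful degradation often comes down to a fallback path. In this minimal sketch, `withFallback` and the recommendation functions are hypothetical; calls are synchronous for brevity, whereas a real service client would be asynchronous and would usually pair the fallback with a timeout or a circuit breaker.

```javascript
// Wrap a primary function so that failures return a degraded fallback result
// instead of propagating an error to the caller.
function withFallback(primary, fallback) {
  return (...args) => {
    try {
      return { degraded: false, value: primary(...args) };
    } catch (err) {
      return { degraded: true, value: fallback(...args), reason: err.message };
    }
  };
}

// Hypothetical services: personalized recommendations with a static fallback list.
const bestsellers = ["book-1", "book-2", "book-3"];
function personalizedRecommendations(_userId) {
  throw new Error("recommendation service timed out"); // simulate an outage
}

const getRecommendations = withFallback(personalizedRecommendations, () => bestsellers);
console.log(getRecommendations("user-42"));
// degraded: true, value: the bestseller list, reason: the timeout message
```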

Real-world Example: For its S3 Standard storage class, Amazon S3 stores data redundantly across a minimum of three Availability Zones within a region. If an entire Availability Zone fails, the service continues to function because the data is replicated across multiple physically separated facilities.

Did you know? Circuit breakers, popularized by Michael Nygard in “Release It!”, prevent cascading failures by automatically stopping requests to failing services. Netflix's Hystrix library brought the pattern into wide use; Hystrix has been in maintenance mode since 2018, and Netflix recommends Resilience4j, a separate library, for new projects. Both implement circuit breakers with three states:

- Closed: Requests flow normally

- Open: Requests are rejected immediately

- Half-open: A limited number of test requests are allowed through to check if the service has recovered
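A minimal circuit breaker with those three states can be sketched as follows. `CircuitBreaker` is a hypothetical class, not the Hystrix or Resilience4j API: it wraps synchronous calls, opens after a configurable number of consecutive failures, and after a cooldown lets a trial call through in the half-open state (a single trial here, rather than a configurable number). The clock is injectable so the behavior can be tested without waiting.

```javascript
class CircuitBreaker {
  constructor({ failureThreshold = 3, resetTimeoutMs = 10000, now = Date.now } = {}) {
    this.failureThreshold = failureThreshold; // consecutive failures before opening
    this.resetTimeoutMs = resetTimeoutMs;     // how long to stay open before a trial
    this.now = now;                           // injectable clock
    this.state = "CLOSED";
    this.failures = 0;
    this.openedAt = 0;
  }

  call(fn) {
    if (this.state === "OPEN") {
      if (this.now() - this.openedAt < this.resetTimeoutMs) {
        throw new Error("circuit open: request rejected"); // fail fast
      }
      this.state = "HALF_OPEN"; // cooldown elapsed: allow a trial request
    }
    try {
      const result = fn();
      this.failures = 0;
      this.state = "CLOSED"; // any success, including the half-open trial, closes it
      return result;
    } catch (err) {
      this.failures += 1;
      // A failed trial reopens immediately; otherwise open once the threshold is hit.
      if (this.state === "HALF_OPEN" || this.failures >= this.failureThreshold) {
        this.state = "OPEN";
        this.openedAt = this.now();
      }
      throw err;
    }
  }
}
```

Because an open breaker fails immediately, callers can switch to a fallback right away instead of waiting on timeouts, which is what keeps one failing dependency from tying up threads and connections across the system.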

4. Latency and Network Communication

In distributed systems, latency is the time it takes for data to travel between different components. Network communication between nodes can introduce delays, especially if the nodes are geographically distributed. Minimizing latency is critical for achieving high-performance systems.

Strategies to reduce latency include:

- Data Locality: Placing data closer to the consumers or processing nodes can reduce the time it takes to retrieve information.

- Caching: Caching frequently accessed data on the client-side or at intermediate nodes can significantly reduce latency for repeat requests.

- Asynchronous Communication: Instead of waiting for a response before continuing with other tasks, asynchronous communication allows a system to process multiple tasks concurrently, reducing waiting times.

- Compression and Efficient Serialization: Minimizing the size of the data being sent over the network can also help reduce latency.
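Caching is the easiest of these strategies to show in code. Below is a minimal cache-aside sketch with a time-to-live (TTL); `TtlCache` and `loadProfile` are hypothetical, the loader is synchronous for brevity, and in production this role is usually played by Redis, Memcached, a CDN, or HTTP caching headers. The TTL bounds how stale a cached answer can get, which is the same consistency-versus-latency trade-off discussed under CAP.

```javascript
// Cache-aside with a TTL: serve repeat requests from memory and only call the
// slow backend on a miss or after the cached entry has expired.
class TtlCache {
  constructor(ttlMs, now = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now; // injectable clock
    this.entries = new Map(); // key -> { value, expiresAt }
  }

  getOrLoad(key, loader) {
    const hit = this.entries.get(key);
    if (hit && hit.expiresAt > this.now()) return hit.value; // fresh hit
    const value = loader(key); // miss or expired: pay the full round trip
    this.entries.set(key, { value, expiresAt: this.now() + this.ttlMs });
    return value;
  }
}

// Hypothetical slow lookup that counts how often the backend is actually hit.
let backendCalls = 0;
const loadProfile = (id) => {
  backendCalls += 1;
  return { id, name: `user ${id}` };
};

const cache = new TtlCache(60000);
cache.getOrLoad("42", loadProfile);
cache.getOrLoad("42", loadProfile);
console.log(backendCalls); // 1
```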

Real-world Example: Google Cloud CDN places content in edge locations around the world, reducing latency by serving content from a location closest to the user. For a website with global visitors, this can reduce page load times by hundreds of milliseconds.

Did you know? The speed of light imposes a physical minimum on network latency. Light travels about 300,000 km/s in a vacuum, but only around 200,000 km/s in fiber optic cables. This means there’s approximately 1 ms of one-way latency per 200 km of fiber distance (about 2 ms round trip), creating a fundamental constraint for globally distributed systems.
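That rule of thumb turns into a quick estimate. This helper is hypothetical and uses the ~200 km per millisecond figure for fiber; it gives only the propagation-delay floor, since routing detours, queuing, and processing all add to it in practice.

```javascript
// Propagation-delay floor for a fiber path (~200,000 km/s = 200 km per ms).
const FIBER_KM_PER_MS = 200;

function minFiberLatencyMs(distanceKm) {
  const oneWay = distanceKm / FIBER_KM_PER_MS;
  return { oneWay, roundTrip: 2 * oneWay };
}

console.log(minFiberLatencyMs(200));  // { oneWay: 1, roundTrip: 2 }
console.log(minFiberLatencyMs(6000)); // { oneWay: 30, roundTrip: 60 }
```

Over a hypothetical 6,000 km fiber path, any operation that needs an acknowledgement from the far side costs at least 60 ms, and protocols that need several round trips pay that cost several times over.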

5. Distributed Consensus

Distributed consensus is the process of ensuring that all nodes in a distributed system agree on a single value or decision, even in the presence of failures or network partitions. It is a critical concept for systems that require coordination and consistency, such as distributed databases and replicated state machines.

Common algorithms used for achieving consensus in distributed systems include:

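- Paxos: A consensus algorithm designed for environments where nodes may crash or become unreachable. It guarantees that at most one value is chosen, and it can make progress only while a majority of acceptors are reachable. Paxos operates in two phases:

- Prepare phase: A proposer sends prepare(n) with a unique proposal number n to the acceptors. Each acceptor that has not already promised a higher number promises to ignore proposals numbered below n and reports the highest-numbered proposal it has already accepted, if any.

- Accept phase: Once a majority has promised, the proposer sends accept(n, v), where v is the value of the highest-numbered proposal reported in those promises (or the proposer's own value if none was reported). If a majority of acceptors accept it, v is chosen.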

- Raft: A more understandable alternative to Paxos, Raft is commonly used in distributed systems to ensure that all nodes reach consensus on the same log entries, which is vital for maintaining consistency in a distributed database or service. Raft simplifies consensus by:

- Electing a leader who handles all client requests

- Using a heartbeat mechanism to maintain leadership

- Ensuring log entries flow in only one direction (from leader to followers)

- Zab (ZooKeeper Atomic Broadcast): Used by Apache ZooKeeper, Zab is a protocol for ensuring that all nodes agree on the same sequence of transactions or commands.
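To make the two Paxos phases concrete, here is a single-decree sketch in which one value gets chosen. It is deliberately simplified and the names are hypothetical: messages are direct in-memory calls that are never lost, acceptor state is not persisted to disk, and proposal numbers are simply passed in (real deployments must keep them unique per proposer and usually elect a distinguished proposer so competing proposers don't keep preempting each other). Raft and Zab are not shown.

```javascript
// Acceptor state: just enough to make the two phases safe.
class Acceptor {
  constructor() {
    this.promised = 0;         // highest proposal number promised so far
    this.acceptedN = 0;        // number of the last accepted proposal (0 = none)
    this.acceptedValue = null; // value of the last accepted proposal
  }

  // Prepare phase (acceptor side): promise to ignore lower-numbered proposals
  // and report any value already accepted.
  prepare(n) {
    if (n <= this.promised) return { ok: false };
    this.promised = n;
    return { ok: true, acceptedN: this.acceptedN, acceptedValue: this.acceptedValue };
  }

  // Accept phase (acceptor side): accept unless a higher number was promised.
  accept(n, value) {
    if (n < this.promised) return { ok: false };
    this.promised = n;
    this.acceptedN = n;
    this.acceptedValue = value;
    return { ok: true };
  }
}

// Proposer: run both phases against the acceptors it can reach.
function propose(acceptors, reachable, n, myValue) {
  const majority = Math.floor(acceptors.length / 2) + 1;

  // Prepare phase: collect promises; give up without a majority.
  const promises = reachable.map((i) => acceptors[i].prepare(n)).filter((r) => r.ok);
  if (promises.length < majority) return { chosen: false };

  // Safety rule: adopt the highest-numbered value already accepted, if any.
  const prior = promises.reduce((a, b) => (b.acceptedN > a.acceptedN ? b : a));
  const value = prior.acceptedN > 0 ? prior.acceptedValue : myValue;

  // Accept phase: the value is chosen once a majority accepts it.
  const accepts = reachable.map((i) => acceptors[i].accept(n, value)).filter((r) => r.ok);
  return accepts.length >= majority ? { chosen: true, value } : { chosen: false };
}

const acceptors = [new Acceptor(), new Acceptor(), new Acceptor()];
console.log(propose(acceptors, [0, 1], 1, "A")); // "A" chosen by acceptors 0 and 1
console.log(propose(acceptors, [1, 2], 2, "B")); // still "A": learned in the prepare phase
```

The second call shows the safety rule at work: the proposer with number 2 learns from acceptor 1 that `A` was already accepted, so it must propose `A` instead of its own value `B`.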

Real-world Example: etcd, the distributed key-value store that powers Kubernetes, uses the Raft consensus algorithm to maintain a consistent view of the cluster state across multiple nodes. This ensures that even if some nodes fail, the Kubernetes control plane can continue making scheduling decisions based on consistent data, as long as a majority of etcd members remain available.
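Raft commits an entry once a majority of members has stored it, and that majority requirement determines how many failures a cluster such as etcd can survive. A quick calculation (the helper names are illustrative):

```javascript
// Raft needs a majority (quorum) of members to elect a leader and commit entries.
function raftQuorum(n) {
  return Math.floor(n / 2) + 1;
}

// Members that can fail while a quorum is still available.
function toleratedFailures(n) {
  return n - raftQuorum(n);
}

for (const n of [1, 2, 3, 4, 5, 7]) {
  console.log(`${n} members: quorum ${raftQuorum(n)}, tolerates ${toleratedFailures(n)} failure(s)`);
}
```

A fourth member raises the quorum to three without tolerating any extra failures compared with three members, which is why etcd's documentation recommends an odd number of members.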

Did you know? The FLP impossibility result (named after Fischer, Lynch, and Paterson, 1985) proves that in a fully asynchronous system, no deterministic consensus algorithm can guarantee that it always terminates while also guaranteeing agreement, if even one node might crash. This is why practical algorithms like Raft never compromise safety but rely on timing assumptions (such as election timeouts) to make progress.

Conclusion

Distributed systems are complex, but understanding these core concepts is necessary for building resilient, scalable, and high-performance applications.

Test Your Knowledge

Question 1: System Design Scenario

You are designing a distributed e-commerce platform that needs to handle Black Friday sales with millions of concurrent users. The system must accurately track inventory to prevent overselling while maintaining high availability during traffic spikes. Which approach would be most appropriate?

A) Use a single relational database with vertical scaling to ensure strong consistency of inventory counts.

B) Implement an eventually consistent NoSQL database with optimistic concurrency control and conflict resolution mechanisms.

C) Use a distributed database with CP properties for inventory management and AP properties for product browsing.

D) Implement a monolithic application with multiple read replicas and a single write master.

Question 2: System Design Scenario

Your team is building a globally distributed real-time collaborative document editing system similar to Google Docs. Which combination of techniques would best address the challenges of this system?

A) Use strong consistency protocols with synchronous replication across all global data centers.

B) Implement Conflict-free Replicated Data Types (CRDTs) with eventual consistency and use operational transformation (OT) to handle concurrent edits.

C) Centralize all document operations through a single master server to prevent conflicts.

D) Use distributed locks with lengthy timeouts to ensure only one user can edit a section at a time.

Answers

Question 1: Correct Answer – C

Use a distributed database with CP properties for inventory management and AP properties for product browsing.

Explanation:

This scenario presents a classic distributed systems challenge that requires different consistency models for different parts of the application:

- Inventory management requires strong consistency (CP) to prevent overselling. In an e-commerce platform during high-traffic events like Black Friday, accurately tracking inventory is business-critical. We must ensure that when an item is sold, all nodes in the system immediately reflect the updated inventory count.

- Product browsing can tolerate eventual consistency (AP) to maximize availability during traffic spikes. Users browsing products can occasionally see slightly outdated information (like an item being shown as available for a few seconds after it sold out) without major business impact.
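On the CP side, the essential operation is a conditional decrement applied to one authoritative copy of the stock count. The sketch below uses a version check (compare-and-set) with retries; `InventoryStore` is a hypothetical in-memory stand-in for a strongly consistent database. The check prevents overselling only because every buyer's compare-and-set goes through the same consistent store; in an eventually consistent system, two replicas could each accept a decrement of the last unit.

```javascript
// Hypothetical in-memory stand-in for a strongly consistent inventory store.
class InventoryStore {
  constructor() {
    this.items = new Map(); // sku -> { stock, version }
  }

  read(sku) {
    return { ...this.items.get(sku) }; // return a copy, as a database read would
  }

  // Compare-and-set: write only if nobody changed the record since it was read.
  compareAndSet(sku, expectedVersion, newStock) {
    const current = this.items.get(sku);
    if (current.version !== expectedVersion) return false;
    this.items.set(sku, { stock: newStock, version: expectedVersion + 1 });
    return true;
  }
}

// Reserve one unit without overselling; retry if another buyer won the race.
function reserveOne(store, sku, maxAttempts = 5) {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const { stock, version } = store.read(sku);
    if (stock <= 0) return false; // sold out: never go negative
    if (store.compareAndSet(sku, version, stock - 1)) return true;
  }
  return false; // too much contention; the caller can retry later
}

const inventory = new InventoryStore();
inventory.items.set("tv-55", { stock: 1, version: 0 });
console.log(reserveOne(inventory, "tv-55")); // true
console.log(reserveOne(inventory, "tv-55")); // false: sold out
```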

Why the other options are incorrect:

- Option A (Single relational database with vertical scaling): This approach would create a single point of failure and likely couldn’t scale to handle “millions of concurrent users.” Vertical scaling has physical hardware limits and doesn’t provide the partition tolerance needed in a distributed system.

- Option B (Eventually consistent NoSQL with optimistic concurrency): While this provides excellent availability and partition tolerance, the eventual consistency model across all aspects of the system could lead to overselling inventory, which violates a key requirement.

- Option D (Monolithic application with read replicas): This approach would improve read performance but wouldn’t address the fundamental scalability issues of a monolithic architecture or provide partition tolerance.

Real-world example: Large e-commerce platforms commonly use different data stores with different consistency guarantees for different parts of the service. Amazon's Dynamo paper (2007), for instance, describes a shopping cart that stays writable during failures by accepting eventual consistency, while operations such as inventory reservation and payment need stronger guarantees.

Question 2: Correct Answer – B

Implement Conflict-free Replicated Data Types (CRDTs) with eventual consistency and use operational transformation (OT) to handle concurrent edits.

Explanation:

For a globally distributed collaborative editing system like Google Docs, the biggest challenges are:

- Low latency for real-time collaboration: Users expect immediate feedback when typing

- Handling concurrent edits: Multiple users may edit the same section simultaneously

- Working across global distances: Speed-of-light limits on network latency make synchronous, strongly consistent replication too slow for keystroke-level interaction

CRDTs and operational transformation (OT) are two distinct techniques for this problem, and either one addresses these challenges by:

- Allowing independent local updates: Each user’s edits are applied locally first (immediate feedback)

- Ensuring conflict-free merging: CRDTs mathematically guarantee that concurrent operations can be merged without conflicts

- Supporting eventual consistency: The system converges to a consistent state without requiring immediate synchronous communication
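Sequence CRDTs used for text (such as RGA, or those in libraries like Yjs and Automerge) are too long to show here, but the property that makes merging safe is visible in the simplest CRDT, a grow-only counter (G-Counter). Each replica increments only its own entry, and merging takes the element-wise maximum; that merge is commutative, associative, and idempotent, so replicas converge no matter how often or in what order they exchange state. This is a textbook sketch, not how any particular editor works.

```javascript
// State-based grow-only counter (G-Counter) CRDT.
class GCounter {
  constructor(replicaId) {
    this.replicaId = replicaId;
    this.counts = {}; // replicaId -> increments made at that replica
  }

  // Only ever touches this replica's own entry; `by` must be non-negative.
  increment(by = 1) {
    this.counts[this.replicaId] = (this.counts[this.replicaId] || 0) + by;
  }

  value() {
    return Object.values(this.counts).reduce((sum, c) => sum + c, 0);
  }

  // Element-wise max: merging the same state twice, or in any order, is harmless.
  merge(other) {
    for (const [id, c] of Object.entries(other.counts)) {
      this.counts[id] = Math.max(this.counts[id] || 0, c);
    }
  }
}

// Two replicas count edits while disconnected, then sync in both directions.
const alice = new GCounter("alice");
const bob = new GCounter("bob");
alice.increment(3);
bob.increment(2);
alice.merge(bob);
bob.merge(alice);
console.log(alice.value(), bob.value()); // 5 5
```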

Why the other options are incorrect:

- Option A (Strong consistency with synchronous replication): This would create unacceptable latency for real-time editing. Users in Australia would have to wait hundreds of milliseconds for confirmation from data centers in North America before seeing their keystrokes appear.

- Option C (Centralize through a single master): This creates a single point of failure and significant latency for users far from the master server. It doesn’t scale for a global application.

- Option D (Distributed locks with timeouts): This would create a poor user experience by blocking concurrent edits to the same section, defeating the purpose of real-time collaboration.

Real-world example: Google Docs uses operational transforms to handle concurrent edits in a way that preserves user intent while ensuring all clients eventually reach the same document state, without requiring strict consistency protocols that would introduce latency.
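The core idea of operational transformation shows up in its simplest case: two concurrent insertions into the same text. This is a textbook-style sketch with hypothetical function names, not Google's implementation; real OT systems also transform deletions and formatting and rely on a server to order operations.

```javascript
// An insert operation is { pos, text, site }; `site` breaks ties at the same position.
function applyInsert(doc, op) {
  return doc.slice(0, op.pos) + op.text + doc.slice(op.pos);
}

// Rewrite `op` so it can be applied after `other` has already been applied.
function transformInsert(op, other) {
  const otherGoesFirst = other.pos < op.pos || (other.pos === op.pos && other.site < op.site);
  return otherGoesFirst ? { ...op, pos: op.pos + other.text.length } : op;
}

const base = "Hello world";
const a = { pos: 5, text: ",", site: "A" };  // user A inserts a comma
const b = { pos: 11, text: "!", site: "B" }; // user B appends "!"

// Each site applies its own edit first, then the transformed remote edit.
const atA = applyInsert(applyInsert(base, a), transformInsert(b, a));
const atB = applyInsert(applyInsert(base, b), transformInsert(a, b));
console.log(atA, atB); // prints "Hello, world!" twice
```

Each site applies its own edit immediately and a transformed version of the remote edit afterward, and both converge on the same text; the `site` field only matters when both users insert at the same position.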