Caching shows up at every level of a computer system, from the nitty gritty of the hardware in your computer to huge distributed systems that span the world. This article explains core caching fundamentals simply.

This is the first part of SWE Quiz’s Caching Mastery Roadmap.

This article covers the basics of caching: its purpose, the trade-offs involved, the main types of caches, and the differences between server-side and client-side caching.

What is Caching?

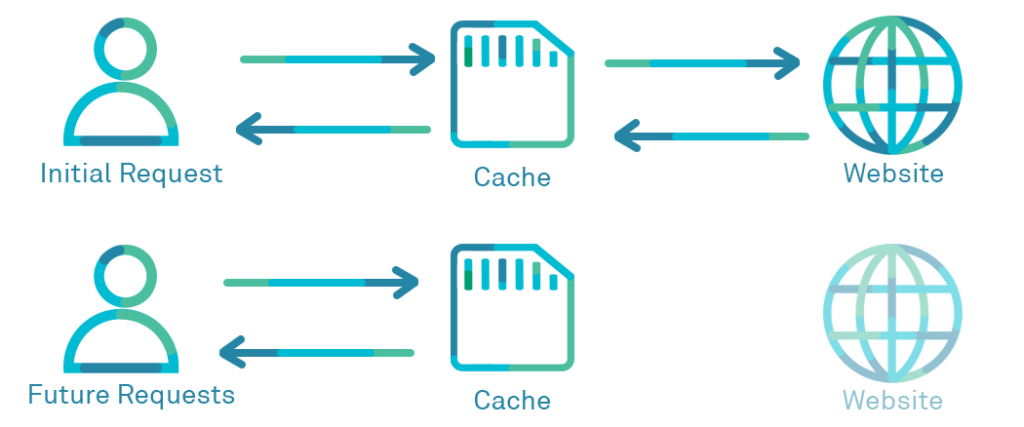

Caching is the process of storing copies of data in a temporary storage location, known as a cache, that is faster to access than the underlying storage layer. The primary goal of caching is to speed up data retrieval by reducing how often the slower layer has to be accessed. A cache keeps only a subset of the data, typically the most frequently accessed or most important items, in the faster storage system.

Why Caching Exists

The primary reason for caching is to improve performance. When data is cached, it can be accessed much faster than retrieving it from the primary storage. This speed-up is crucial in many applications, from web browsing, where it accelerates page loading times, to database management, where query responses become faster.

An analogy: Imagine a high-end restaurant called Google.com that receives lots of customers, who order food (web searches). Instead of cooking every dish from scratch, the chef keeps the most popular dishes (the most common searches) ready in the oven, which is the cache. Caching works the same way: frequently accessed data is stored in a temporary holding area, closer to the point of request, for quicker retrieval. Think of a cache as a digital oven that keeps copies of web pages, database query results, or application resources readily at hand.

The benefits include faster loading times (customers get their pages sooner), improved efficiency, and reduced load on heavier processes (such as the server).

When an order comes through and the food item already exists in the oven, this is called a cache hit.

When it doesn’t exist, this is a cache miss.

A system operates more effectively with a higher frequency of cache hits. The success of a cache is quantified by the cache hit ratio, calculated as the number of cache hits divided by the total number of cache requests. A higher ratio signals better system performance.
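The hit ratio calculation can be sketched in a few lines of Python. This is a minimal illustration, not a production cache: the `CountingCache` class and the `slow_menu_lookup` function are hypothetical names invented for this example, with the function standing in for any slow source such as a database or network call.

```python
class CountingCache:
    """A dictionary-backed cache that counts hits and misses."""

    def __init__(self, load_from_source):
        self._load = load_from_source   # slow lookup, used only on a miss
        self._store = {}
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._store:
            self.hits += 1              # cache hit: served from the cache
            return self._store[key]
        self.misses += 1                # cache miss: go to the slow source
        value = self._load(key)
        self._store[key] = value
        return value

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def slow_menu_lookup(dish):
    # Hypothetical stand-in for a slow database or network call.
    return f"freshly cooked {dish}"


cache = CountingCache(slow_menu_lookup)
for order in ["pasta", "soup", "pasta", "pasta", "soup"]:
    cache.get(order)

print(cache.hits, cache.misses, cache.hit_ratio())  # 3 2 0.6
```

The first request for each dish is a miss, and every repeat is a hit, so five requests produce three hits and a hit ratio of 3 / 5 = 0.6.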

Some important points to note:

- Cache systems only accommodate a portion of the entire dataset.

- Caches usually live in memory, which is much faster than the disk where databases store their data. Memory costs more than disk space, so caches are usually much smaller than the storage systems behind them.

- Caching is practically a necessity for high-traffic websites and applications.
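Because a cache holds only part of the dataset, something has to be discarded once it fills up. The sketch below uses Python's standard `functools.lru_cache` with a deliberately tiny `maxsize` to make that visible. The `fetch_record` function is a hypothetical stand-in for a slow read, and least-recently-used eviction is just one possible policy, chosen here because the standard library provides it.

```python
from functools import lru_cache


@lru_cache(maxsize=2)          # the cache holds at most two results
def fetch_record(record_id):
    # Hypothetical stand-in for a slow read from disk-backed storage.
    return f"record-{record_id}"


for record_id in [1, 2, 1, 3, 2]:
    fetch_record(record_id)

info = fetch_record.cache_info()
print(info.hits, info.misses, info.currsize)  # 1 4 2
```

Only the second request for record 1 is a hit. Fetching record 3 evicts record 2 (the least recently used entry), so the final request for record 2 misses again even though it was cached earlier.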

Caching is a common concept in software engineering interviews.

SWE Quiz is the perfect way to test yourself and fill in any gaps in your software knowledge.

Trade-offs of Caching: Latency vs. Consistency

Caching inherently involves a trade-off between latency and consistency. Remember those copies in the cache? What happens when the original data updates? Here’s where the trade-off arises:

- Caching reduces latency by allowing data to be accessed more quickly. The degree of latency reduction depends on various factors, including cache size and the algorithm used for data retrieval and replacement.

- Caching can weaken consistency between the cache and the underlying primary storage. When data changes in the primary storage, the cached copy stays outdated until it is refreshed or removed, so readers may see stale values. Imagine seeing yesterday’s news headlines instead of breaking news!

Managing this trade-off involves strategies like cache invalidation, where outdated data is removed or updated in the cache, and choosing appropriate caching algorithms that balance speed and freshness of data.

Finding the sweet spot between latency and consistency depends on the specific data. Static content like website images can tolerate some staleness for optimal speed, while financial transactions demand real-time accuracy.
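One common way to bound staleness is to give each entry a time-to-live (TTL) and to allow explicit invalidation. The following is a minimal sketch under stated assumptions: `TTLCache` is a hypothetical class written for this article, the `headlines` dictionary stands in for the primary storage, and a fake clock is injected so the example runs without waiting.

```python
import time


class TTLCache:
    """Cache whose entries expire after ttl_seconds."""

    def __init__(self, load_from_source, ttl_seconds, clock=time.monotonic):
        self._load = load_from_source
        self._ttl = ttl_seconds
        self._clock = clock
        self._store = {}                    # key -> (value, expiry time)

    def get(self, key):
        entry = self._store.get(key)
        if entry is not None and self._clock() < entry[1]:
            return entry[0]                 # fresh enough: low latency
        value = self._load(key)             # expired or missing: reload
        self._store[key] = (value, self._clock() + self._ttl)
        return value

    def invalidate(self, key):
        self._store.pop(key, None)          # drop a copy known to be outdated


headlines = {"front_page": "yesterday's news"}   # stand-in primary storage
now = [0.0]                                      # fake clock for the demo
cache = TTLCache(headlines.get, ttl_seconds=60, clock=lambda: now[0])

first = cache.get("front_page")
headlines["front_page"] = "breaking news"        # the source changes
stale = cache.get("front_page")                  # cache still serves the old copy
now[0] = 61.0                                    # the TTL passes
refreshed = cache.get("front_page")              # reloaded from the source

headlines["front_page"] = "correction"
cache.invalidate("front_page")                   # explicit invalidation
after_invalidate = cache.get("front_page")

print(stale, "|", refreshed, "|", after_invalidate)
```

A short TTL favors consistency, and a long TTL favors latency and lower load on the source. Explicit invalidation removes the wait entirely, but the code that writes to the source has to know which cache entries are affected.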

Different Types of Caches

The most common types of caches are:

- Page Cache: Operating systems use a page cache to keep recently used pages of file data from disk in main memory. Serving reads and buffering writes in memory reduces the time spent reading from or writing to a disk. (Generally, system design interviews don’t cover this as much, unless you are interviewing for a database-related team.)

- Content Cache: Used primarily in web applications to store web resources like HTML pages, images, videos, and scripts. It improves the user experience by reducing load times for frequently accessed web content.

- Data Cache: Common in database systems, data caching involves storing query results or frequently accessed database records. It speeds up database queries by avoiding redundant data retrieval operations.

- Query Cache: Specific to database systems, a query cache stores the result set of a query. Subsequent identical queries can be served directly from the cache, greatly improving query response time.
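The query cache idea can be sketched with Python's built-in `sqlite3` module and a dictionary keyed by the query text and its parameters. This is an application-level illustration, not how any particular database implements its query cache. The `users` table and the `cached_query` and `write` helpers are hypothetical, and the invalidation is deliberately crude: any write clears every cached result.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
conn.executemany("INSERT INTO users (name) VALUES (?)", [("Ada",), ("Linus",)])

query_cache = {}             # (sql, params) -> list of result rows
stats = {"db_calls": 0}      # how often the database was actually queried


def cached_query(sql, params=()):
    key = (sql, params)
    if key not in query_cache:
        stats["db_calls"] += 1
        query_cache[key] = conn.execute(sql, params).fetchall()
    return query_cache[key]


def write(sql, params=()):
    conn.execute(sql, params)
    query_cache.clear()      # crude invalidation: drop all cached results


sql = "SELECT name FROM users ORDER BY id"
first = cached_query(sql)    # miss: runs against the database
second = cached_query(sql)   # hit: identical query served from the cache
calls_before_write = stats["db_calls"]

write("INSERT INTO users (name) VALUES (?)", ("Grace",))
third = cached_query(sql)    # miss again, because the write cleared the cache

print(calls_before_write, stats["db_calls"], third)
```

Clearing everything on each write keeps results correct at the cost of hit ratio. A finer-grained scheme would invalidate only the queries that touch the modified table.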

However, caches go much deeper than just these. You can categorize caches by data type, location, consistency, and more.

By Data Type:

- Content caches: These store static files like images, videos, scripts, and stylesheets, accelerating website loading and reducing server load.

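- Page caches: These hold entire rendered web pages, so a repeat request can be served without rebuilding the page and reloads become noticeably faster. (This is a different sense of the term from the operating system page cache described earlier.)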

- Data caches: These save database queries and results, offering quick data access within applications, especially for frequently-used operations.

- Query caches: These remember previous searches and their outcomes, eliminating redundant processing and boosting search efficiency.

- Object caches: These store complex data structures like objects and entities, accelerating access in application frameworks and programming languages.

By Location:

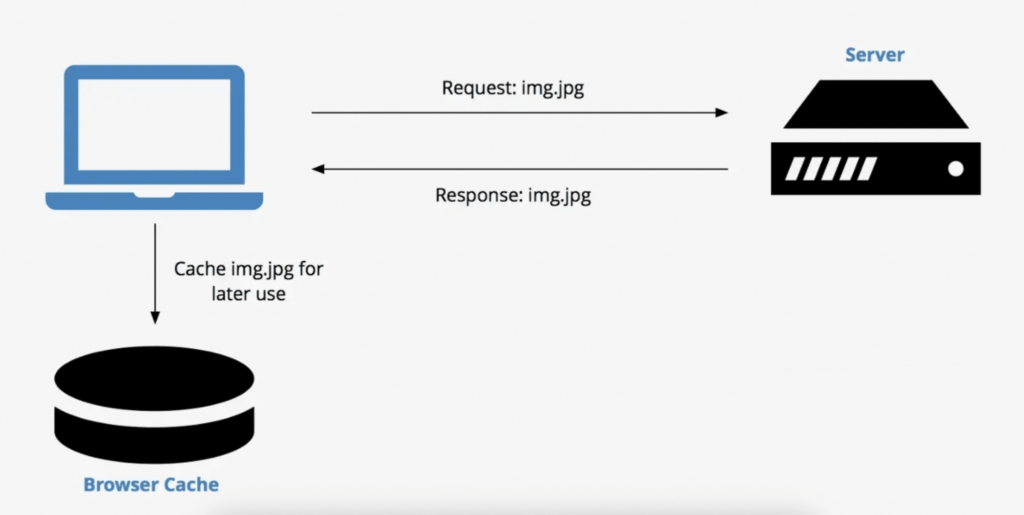

- Browser cache: Each web browser maintains its own cache, storing files such as images, scripts, and stylesheets from the websites you visit. Because the cache is local to your device, repeat visits load faster.

- Operating system cache: Your operating system also has a cache for frequently accessed files like system libraries and application data, improving overall system performance.

- Application cache: Many applications implement their own internal caches for specific data related to their functionality, further optimizing their performance.

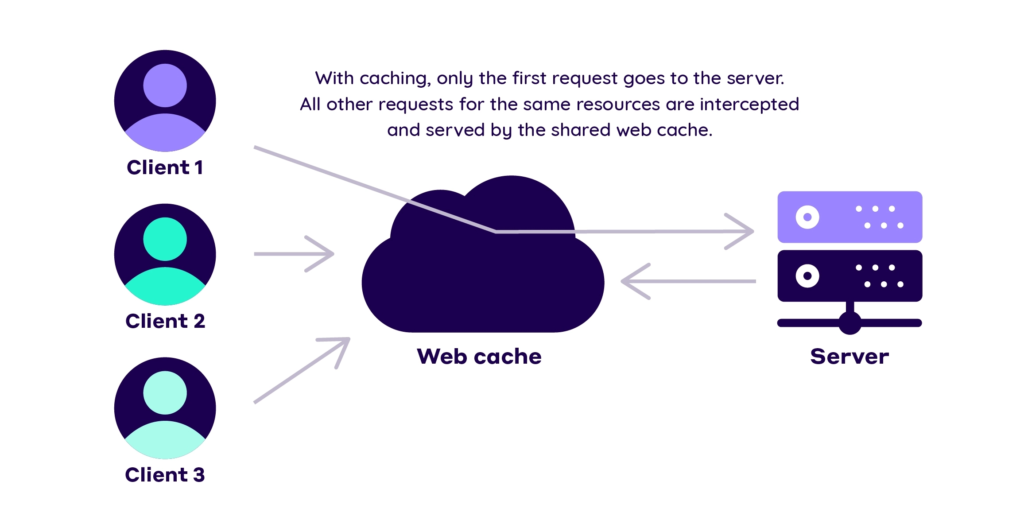

- Network cache: Proxy servers and other intermediary network devices can also cache responses, minimizing traffic over longer distances and speeding up web browsing for the multiple users who share them.

- Content Delivery Network (CDN) cache: CDNs strategically place servers around the globe, each equipped with a cache to deliver content closer to users, significantly reducing latency for geographically diverse audiences.

- L1, L2, L3 Caches: These are levels of cache memory in a computer’s architecture, with L1 being the fastest and smallest, followed by the larger and slightly slower L2 and L3 caches. They are used to store data and instructions that the CPU is likely to need soon.

By Consistency Model:

- Write-through cache: Updates made to the cached data are immediately reflected in the original source, ensuring consistency but sacrificing some write performance.

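- Write-back cache: Writes go to the cache first, and updated data is written back to the source later, either periodically or when the cache reaches capacity and entries are evicted. This provides better write performance, but the source can hold stale data for a short time, and updates that have not yet been written back can be lost if the cache fails.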

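- No-write cache: This cache serves reads only. Writes go straight to the source and bypass the cache (similar to what is often called a write-around policy), which improves read performance and simplifies consistency issues but limits the cache’s usefulness for scenarios requiring frequent updates.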
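The difference between write-through and write-back comes down to when the source is updated. The sketch below models the source as a plain dictionary. The class names and the `flush` method are hypothetical, chosen for illustration, and real caches decide when to flush based on timers or eviction.

```python
class WriteThroughCache:
    def __init__(self, source):
        self.source = source        # dict standing in for the slow primary store
        self.cache = {}

    def write(self, key, value):
        self.cache[key] = value
        self.source[key] = value    # source updated on every write


class WriteBackCache:
    def __init__(self, source):
        self.source = source
        self.cache = {}
        self.dirty = set()          # keys changed in the cache, not yet in the source

    def write(self, key, value):
        self.cache[key] = value
        self.dirty.add(key)         # defer the slow write to the source

    def flush(self):
        for key in self.dirty:
            self.source[key] = self.cache[key]
        self.dirty.clear()


through_source, back_source = {}, {}
through = WriteThroughCache(through_source)
back = WriteBackCache(back_source)

through.write("balance", 100)
back.write("balance", 100)

before_flush = dict(back_source)    # snapshot: the source lags behind the cache
back.flush()

print(through_source, before_flush, back_source)
# {'balance': 100} {} {'balance': 100}
```

Write-through pays the cost of the slow write every time but never lets the source fall behind. Write-back batches that cost into `flush`, which is faster for bursts of writes but leaves a window in which the source does not yet reflect the latest value.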

Other Unique Caches:

- Opcode cache: Used by interpreters and virtual machines, this cache stores the compiled form of code (bytecode, or machine code in the case of a just-in-time (JIT) compiler) so that it does not have to be recompiled on every run, reducing execution overhead.

- Instruction cache: In processors, this cache stores recently fetched instructions, reducing the need to retrieve them from slower memory again, enhancing instruction execution speed.

- Translation Lookaside Buffer (TLB): This cache stores recent virtual-to-physical address translations, speeding up virtual memory address translation, a crucial step in accessing data in memory.

Server-side vs Client-side Caching

- Server-side Caching: This occurs on the server where the data originates. Examples include database caching and web server caching. Server-side caching benefits all users accessing the server, as it reduces the server’s load and improves response times.

- Client-side Caching: This happens on the client side, such as in a web browser or a user’s application. It stores copies of files that the client (such as the user’s phone) has accessed, reducing the need to repeatedly request the same data from the server. This type of caching is specific to each user and their interaction with the application.

You’ve successfully completed Part 1 of Caching Fundamentals.

Now is a great time to test your knowledge.

Keep reading to the next in the series: Caching Policies and Algorithms Explained Simply
